Low emission building control with zero-shot reinforcement learning

Heating and cooling systems in buildings account for 31% of global energy use.

Many of these systems are regulated by Rule Based Controllers (RBCs) that neither maximise energy efficiency nor minimise emissions by interacting optimally with the grid.

Control via Reinforcement Learning (RL) has been shown to significantly improve building energy efficiency. However, existing solutions require access to building-specific simulators, and compiling such data for every building in the world is unrealistic.

In response, we show it is possible to obtain emission-reducing policies without such knowledge a priori – a paradigm we call zero-shot building control. We combine ideas from system identification and model-based RL to create PEARL: Probabilistic Emission-Abating Reinforcement Learning. We also show that a short period of active exploration is all that is required to build a working model.
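The idea of pairing system identification with model-based planning can be sketched in a few lines. This is a toy illustration only, not PEARL itself: it swaps in a linear least-squares model and a random-shooting planner, and the building dynamics (`true_step`), the comfort band, the penalty weight and all numeric values are hypothetical assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

def true_step(temp, u, t_out=5.0):
    """Hypothetical building dynamics; unknown to the controller."""
    return temp + 0.1 * (t_out - temp) + 2.0 * u

# 1) Short exploration phase: apply random heating actions and log transitions.
temp, data = 18.0, []
for _ in range(200):
    u = rng.uniform(0.0, 1.0)                      # heating power in [0, 1]
    nxt = true_step(temp, u) + rng.normal(0.0, 0.05)
    data.append((temp, u, nxt))
    temp = nxt
data = np.array(data)

# 2) System identification: fit next_temp ≈ w0*temp + w1*u + w2 by least squares.
X = np.column_stack([data[:, 0], data[:, 1], np.ones(len(data))])
w, *_ = np.linalg.lstsq(X, data[:, 2], rcond=None)

def plan(temp, carbon, n_candidates=500, comfort=(19.0, 23.0)):
    """Random-shooting planner: sample action sequences, roll them out in the
    learned model, and return the first action of the lowest-cost sequence."""
    horizon = len(carbon)
    seqs = rng.uniform(0.0, 1.0, size=(n_candidates, horizon))
    temps = np.full(n_candidates, float(temp))
    cost = np.zeros(n_candidates)
    for t in range(horizon):
        temps = w[0] * temps + w[1] * seqs[:, t] + w[2]
        violation = (np.maximum(comfort[0] - temps, 0.0)
                     + np.maximum(temps - comfort[1], 0.0))
        # Emissions proxy (carbon intensity x energy) plus an assumed comfort penalty.
        cost += carbon[t] * seqs[:, t] + 10.0 * violation
    return seqs[np.argmin(cost), 0]

# 3) Control: pick the next action given a (hypothetical) carbon-intensity forecast.
action = plan(20.0, np.array([0.2, 0.5, 0.3]))
```

The planner only ever queries the fitted weights `w`, never `true_step`, which is the sense in which no simulator is needed in advance.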

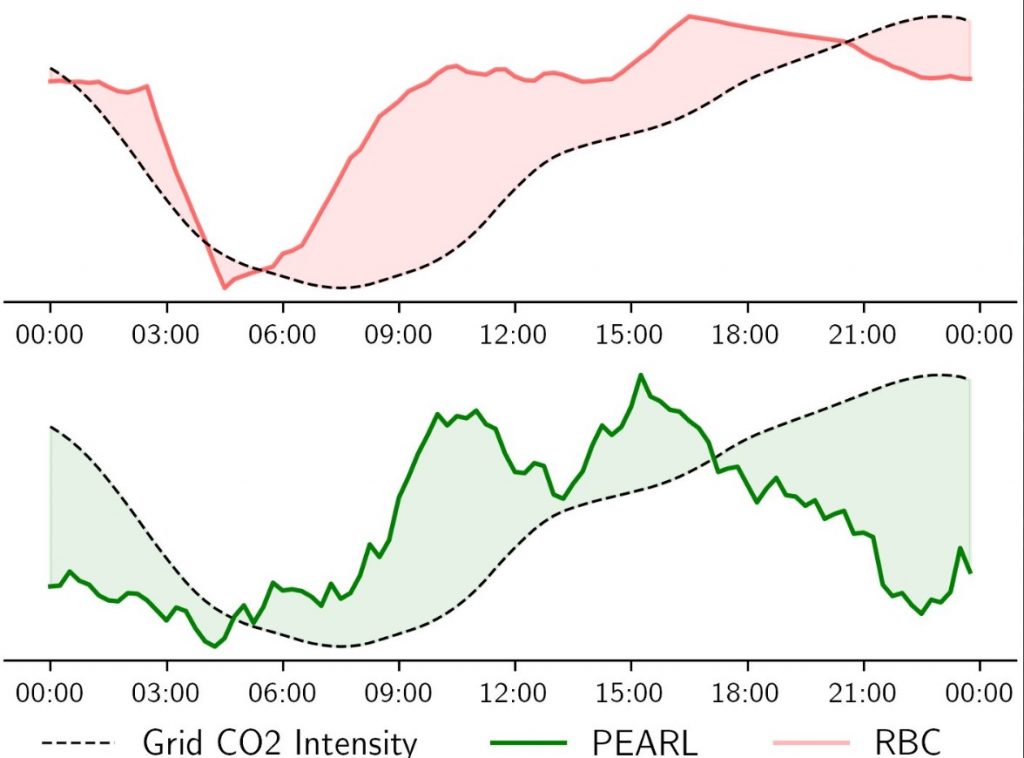

In experiments across three varied building energy simulations, we show that PEARL outperforms an existing RBC in one case and popular RL baselines in all cases, reducing building emissions by as much as 31% whilst maintaining thermal comfort.

PEARL: Probabilistic Emission-Abating Reinforcement Learning

PEARL (Probabilistic Emission-Abating Reinforcement Learning) is a deep RL algorithm that finds performant building control policies online, without pre-training. This is the first demonstration that this is possible. We show that PEARL can reduce annual emissions by up to 31% compared with a conventional controller whilst maintaining thermal comfort, and that it outperforms all RL baselines used for comparison. PEARL is simple to commission, requiring no historical data or simulator access in advance, which paves a path towards general solutions that could control any building. The scaled deployment of such low emission building control systems could prove a cost-effective method for tackling climate change.

The graph below shows how PEARL shifts load to times of day when grid carbon intensity is lowest:
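As a rough numerical illustration of why shifting load matters (separate from the paper's graph and results), the sketch below compares a flat schedule with one that greedily moves the same energy into the lowest-carbon hours. The intensity profile, daily demand and per-hour cap are all hypothetical, and the sketch ignores the thermal comfort constraint that a real controller must respect.

```python
import numpy as np

# Hypothetical hourly grid carbon intensity (kgCO2/kWh), lowest around midday.
hours = np.arange(24)
intensity = 0.30 - 0.15 * np.exp(-((hours - 13) ** 2) / 18.0)

total_kwh, cap_kwh = 24.0, 2.0   # assumed daily demand and per-hour limit

# Baseline: spread the demand evenly over the day.
flat = np.full(24, total_kwh / 24)

# Shifted: fill the cleanest hours first, up to the per-hour cap.
shifted = np.zeros(24)
remaining = total_kwh
for h in np.argsort(intensity):
    shifted[h] = min(cap_kwh, remaining)
    remaining -= shifted[h]
    if remaining <= 0:
        break

# Emissions are energy used in each hour times that hour's carbon intensity.
flat_emissions = float(flat @ intensity)
shifted_emissions = float(shifted @ intensity)
print(f"flat: {flat_emissions:.2f} kgCO2, shifted: {shifted_emissions:.2f} kgCO2")
```

Both schedules deliver the same total energy; only the timing differs, so any reduction comes purely from when the energy is drawn from the grid.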

Read the paper from Scott Jeen, Alessandro Abate, and Jonathan Cullen: Low emission building control with zero-shot reinforcement learning.

View the code on GitHub.

Find out more about Scott’s research on his website.

Photo credit: Max Bender